Content Authenticity and Media Provenance

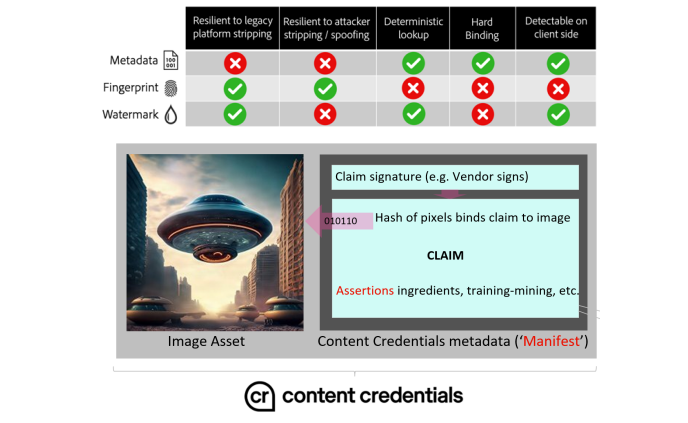

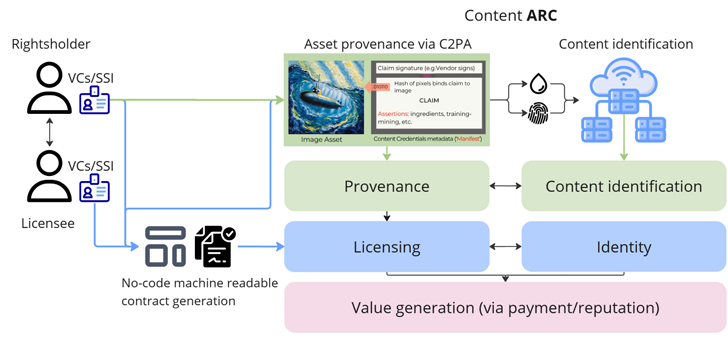

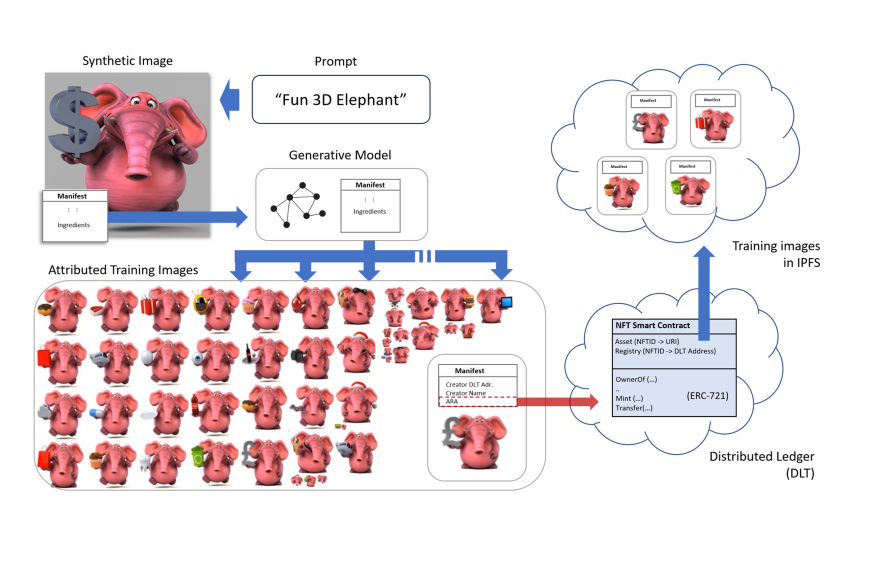

Our group addresses the societal threat of fake news and misinformation. While many researchers focus on deepfake detection, not all AI-generated content is deceptive, and building detectors is an arms race with GenAI. Human rights organizations note that most visual misinformation is not AI-generated but genuine content that has been miscontextualized or misattributed. For this reason, we see media provenance as crucial: it enables users to trace the origins of content and make informed trust decisions. My research develops technologies for provenance (secure metadata, watermarking, fingerprinting, distributed ledgers) and contributes to international standards such as C2PA for communicating provenance.

ARCHANGEL (2017-2019) was one of my earliest media provenance projects, working with National Archives around the world to help tamper-proof content by storing visual provenance information on blockchain. UKRI/EPSRC selected this work as a highlight of its 10-year Digital Economy research portfolio. Other projects included TAPESTRY (identity provenance, 2016-2018) and CoMEHeRe (an early exploration of healthcare data monetization based on provenance, 2017-2019).

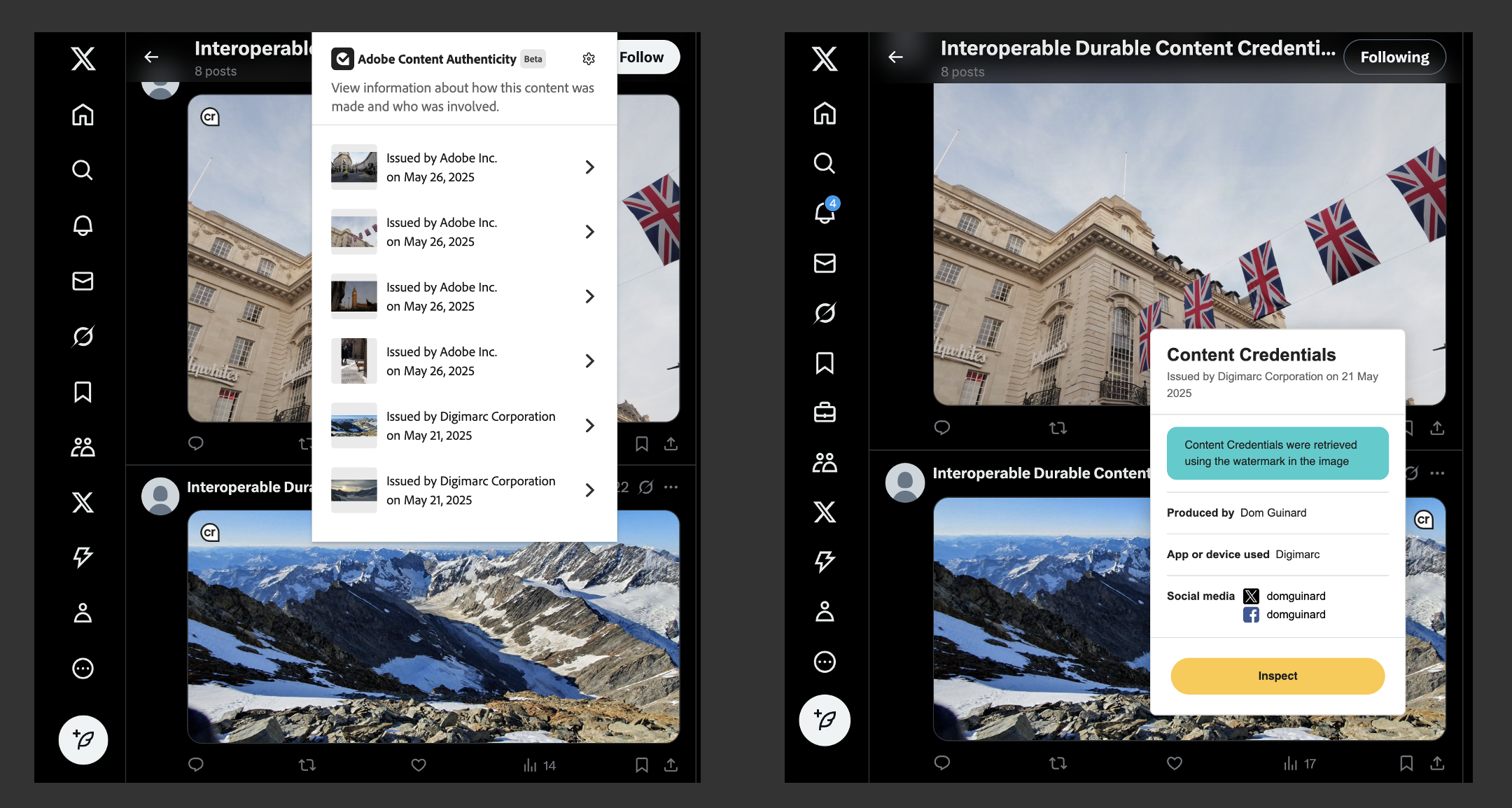

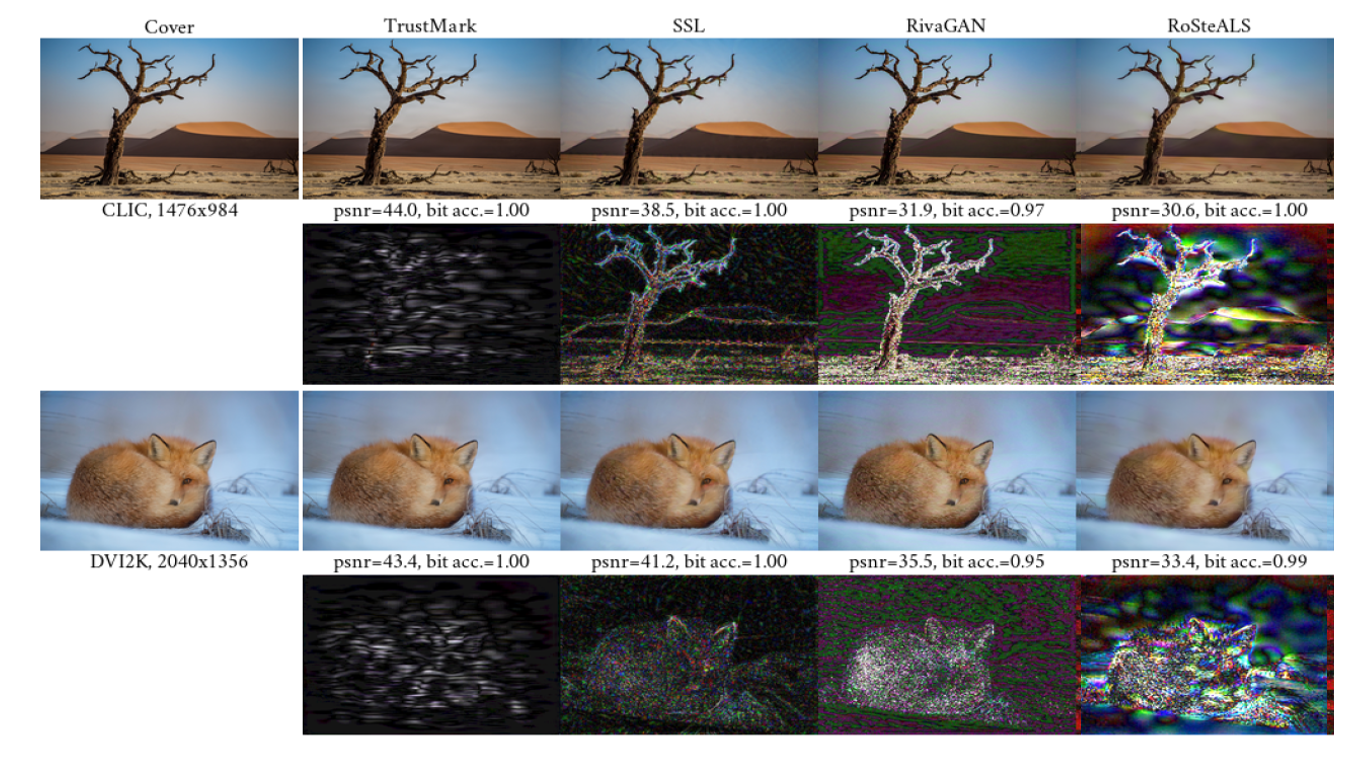

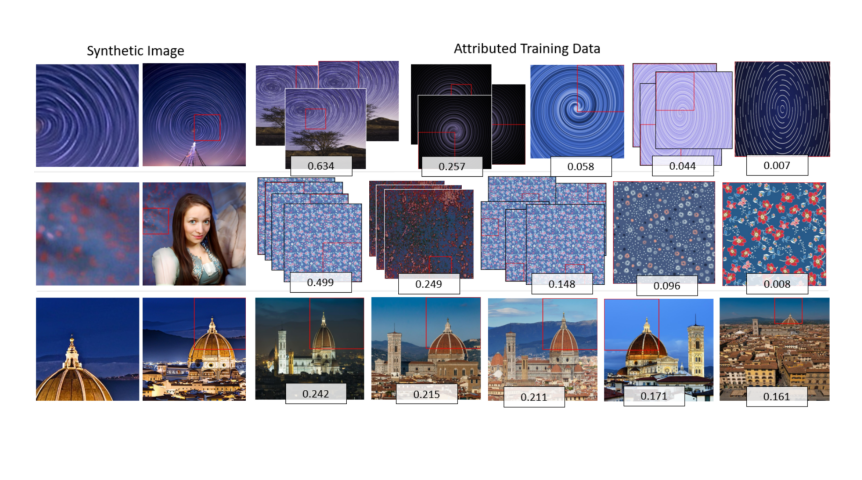

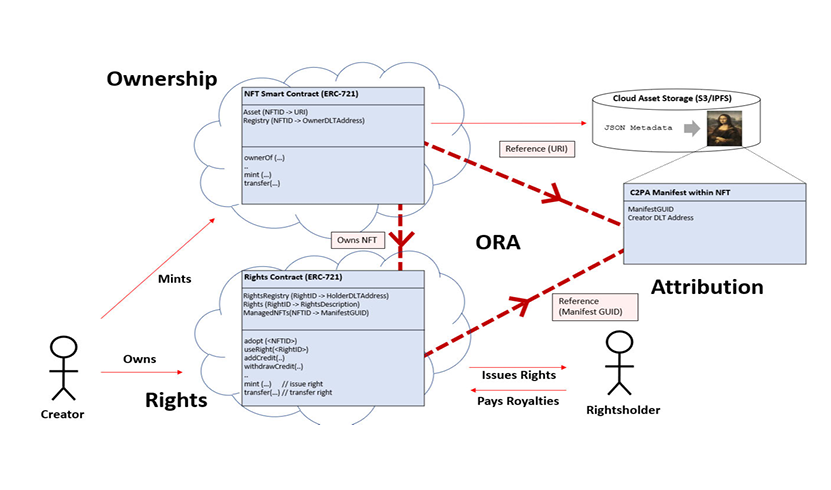

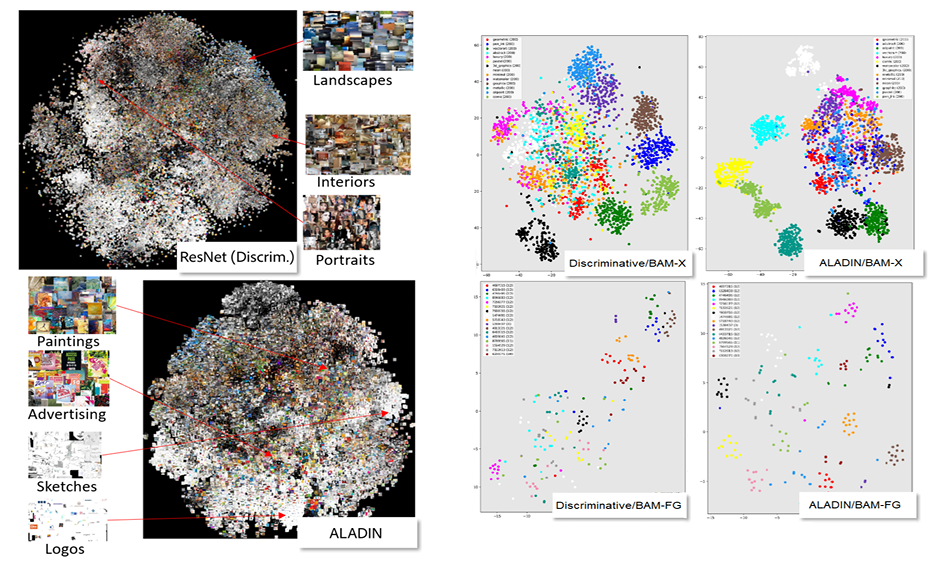

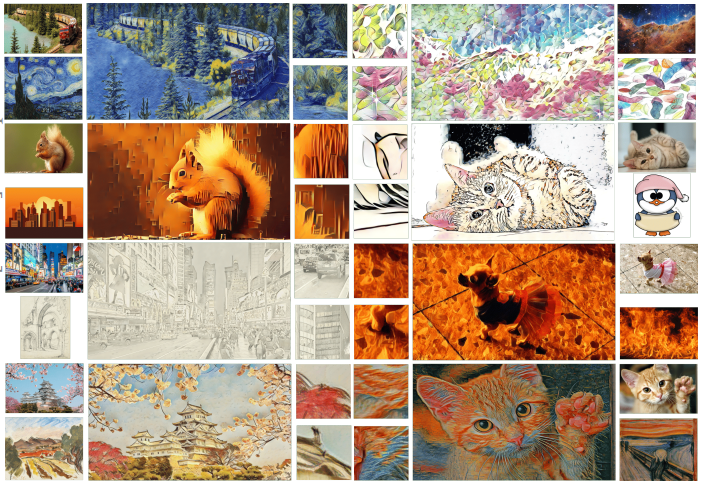

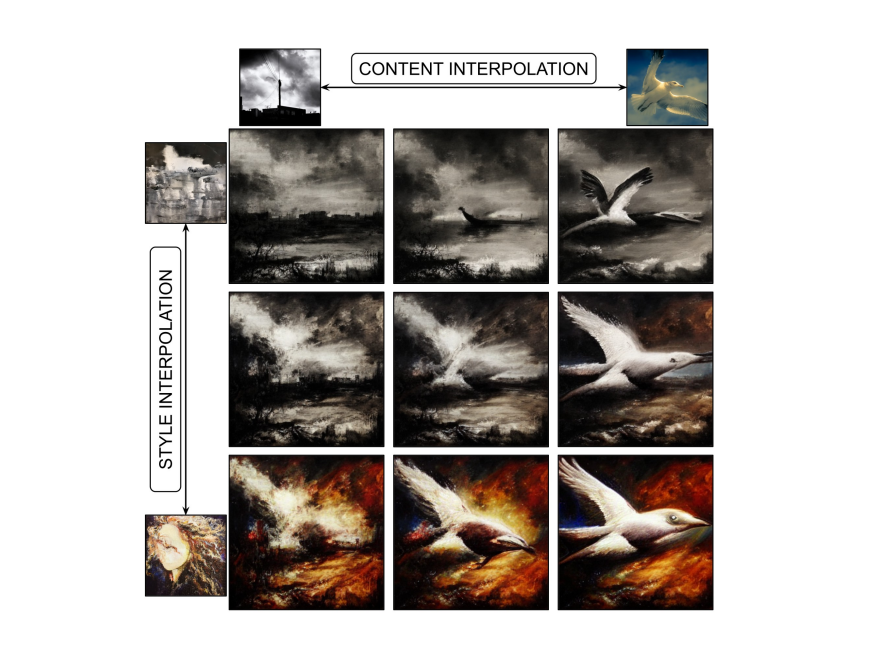

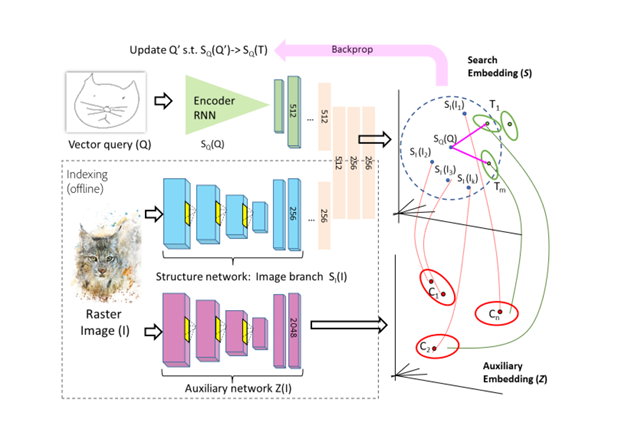

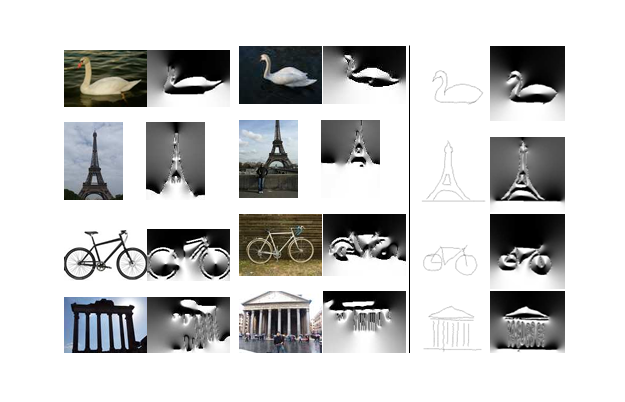

After joining Adobe Research in 2019, I co-founded the Content Authenticity Initiative (CAI), an industry coalition now exceeding 5000 members (2025). CAI's technical framework evolved into a cross-industry standard (C2PA; Coalition for Content Provenance and Authenticity). I have been involved in the C2PA technical working group from the outset, chairing two task forces focused on watermarking and blockchain. These technologies make the C2PA metadata embedded within assets (referred to as Content Credentials) more durable, i.e. able to survive content redistribution, particularly through social media platforms that strip metadata. Our joint Adobe–Surrey work has produced perceptual fingerprinting [e.g. OSCAR-Net, ICCV 2021; ICN, CVPRW 2021], open-source watermarking [e.g. RoSteALS, CVPRW 2023; TrustMark, ICCV 2025], methods to summarize provenance [e.g. VIXEN, ICCV 2023; ImProvShow, BMVC 2025], and studies of its value to users [ACM C&C 2025].
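As a rough illustration of why fingerprinting helps when metadata is stripped, the sketch below recovers a provenance record for a redistributed copy by matching a perceptual fingerprint against a registry. It is a toy under stated assumptions: the average-hash fingerprint is a simple stand-in and is not OSCAR-Net, TrustMark, or any C2PA API, and the names `average_hash`, `lookup`, `registry`, and `manifest-001` are hypothetical.

```python
from typing import Dict, List, Optional

# Grayscale pixels (0-255); a stand-in for a decoded image. Hypothetical type.
Image = List[List[int]]


def average_hash(image: Image) -> int:
    """Toy perceptual fingerprint: one bit per pixel, set if brighter than the mean."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def lookup(registry: Dict[int, str], image: Image, max_distance: int = 2) -> Optional[str]:
    """Return the record of the nearest registered fingerprint, if close enough."""
    query = average_hash(image)
    best = min(registry, key=lambda fp: hamming(fp, query), default=None)
    if best is not None and hamming(best, query) <= max_distance:
        return registry[best]
    return None


# The original asset is registered together with a (hypothetical) manifest ID.
original: Image = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [200, 20, 220, 40],
    [210, 10, 230, 30],
]
registry = {average_hash(original): "manifest-001"}

# A redistributed copy: metadata is gone and pixels are slightly brightened.
redistributed: Image = [[p + 10 for p in row] for row in original]
redistributed[0][0] += 3  # a little extra noise on one pixel

# An unrelated image that should not match any registered asset.
unrelated: Image = [[0, 0, 255, 255] for _ in range(4)]

recovered = lookup(registry, redistributed)
no_match = lookup(registry, unrelated)
```

Here the brightened copy still maps to the registered record because the fingerprint depends on relative brightness rather than on exact bytes, while the unrelated image falls outside the distance threshold. Real systems use learned fingerprints or watermarks that tolerate far stronger transformations than this sketch does.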

I have been involved in policy and regulatory discussions around misinformation and online harms. As part of the Royal Society Pairing Scheme, I was embedded within the disinformation team at the UK Department for Science, Innovation and Technology (DSIT). I have also presented to DARPA, the US National Academy of Sciences and the European Commission, among others, to help inform policymakers about the emerging technical landscape around media provenance. Our research papers are often cited by public bodies reporting on media authenticity, e.g. the NSA/NCSC and World Privacy Forum reports on C2PA.

Sample of relevant publications

“TrustMark: Robust Watermarking and Watermark Removal for Arbitrary Resolution Images”

T. Bui, S. Agarwal, J. Collomosse

IEEE International Conference on Computer Vision (ICCV), 2025

“To Authenticity, and Beyond! Building Safe and Fair Generative AI upon the Three Pillars of Provenance”

J. Collomosse, A. Parsons

IEEE Computer Graphics and Applications (IEEE CG&A), 2024

“ImProvShow: Multimodal Fusion for Image Provenance Summarization”

A. Black, J. Shi, Y. Fan, J. Collomosse

British Machine Vision Conference (BMVC), 2025

“RoSteALS: Robust Steganography using Autoencoder Latent Space”

T. Bui, S. Agarwal, N. Yu, J. Collomosse

CVPR Workshop on Media Forensics (CVPRW), 2023

“Content Authenticities: A Discussion on the Values of Provenance Data for Creatives and Their Audiences”

C. Moruzzi, E. Tallyn, F. Liddell, B. Dixon, J. Collomosse, C. Elsden

ACM Creativity and Cognition (C&C), 2025

“OSCAR-Net: Object-centric Scene Graph Attention for Image Attribution”

E. Nguyen, T. Bui, V. Swaminathan, J. Collomosse

IEEE International Conference on Computer Vision (ICCV), 2021
